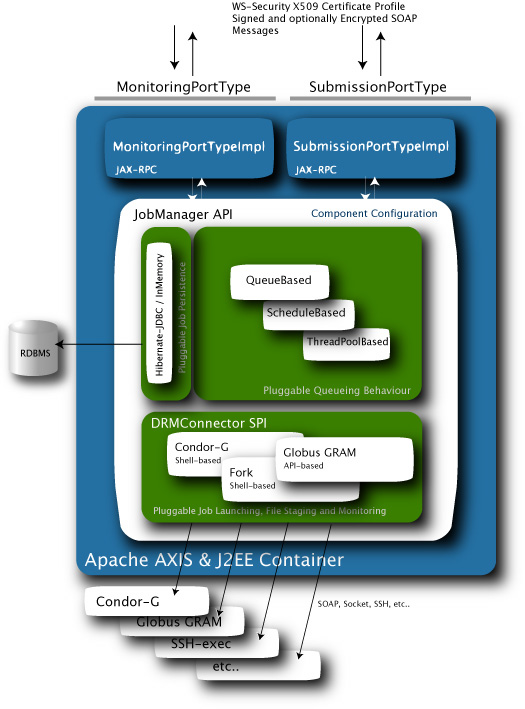

GridSAM consists of several subsystems that work together to support pluggable job persistence, queueing, job launching, file staging and failure recovery. Each subsystem has a set of well-defined interfaces. These are collectively known as the Service Provider Interface (SPI), in contrast to the Application Programming Interface (API) used by clients that require job submission functionality. The SPIs and the API are discussed in more detail in this document.

The JobManager interface is the main entry point to the GridSAM system. This interface SHOULD be used by the embedding system (e.g. a Web Service, Portal, command-line) to interact with the job submission and monitoring functionality provided by the sub-systems. Instances of JobManager can be built using one of the JobManagerBuilder implementations, depending on your needs. Configuration details are discussed further in the User's Guide.

The JobInstance interface is a view of the state of a submitted job. It provides operations for inspecting the history of a submitted job and its properties. All properties are immutable.
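
One common way to honour "all properties are immutable" is to hand out snapshots that copy their collections on construction and expose them read-only. The sketch below is hypothetical: `JobView` and `JobSnapshot` are invented names, not GridSAM's actual JobInstance interface, and the immutability is shallow (property values themselves are not copied).

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical read-only job view; NOT GridSAM's real JobInstance interface.
interface JobView {
    String getID();
    List<String> getStateHistory();      // oldest state first
    Map<String, Object> getProperties(); // read-only
}

// Immutable snapshot: collections are copied on construction and wrapped
// read-only, so later changes to the caller's lists/maps are not visible here.
final class JobSnapshot implements JobView {
    private final String id;
    private final List<String> history;
    private final Map<String, Object> properties;

    JobSnapshot(String id, List<String> history, Map<String, Object> properties) {
        this.id = id;
        this.history = Collections.unmodifiableList(new ArrayList<>(history));
        this.properties = Collections.unmodifiableMap(new HashMap<>(properties));
    }

    public String getID() { return id; }
    public List<String> getStateHistory() { return history; }
    public Map<String, Object> getProperties() { return properties; }

    // Most recent state, or null if no state was recorded.
    String currentState() {
        return history.isEmpty() ? null : history.get(history.size() - 1);
    }
}
```

Any attempt to modify the returned collections throws `UnsupportedOperationException`, so monitoring clients cannot corrupt the job's recorded history.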

The Service Provider Interfaces (SPIs) represent pluggable points in the GridSAM sub-systems. They are hidden from the actor requiring job submission and monitoring capabilities. Together, the components implementing the SPIs provide the backbone for the JobManager API. Determining the optimal composition of SPI implementations is the role of the system administrator in the case of the GridSAM Web Service, or of the developer of the embedding system when using the GridSAM API.

The JobInstanceStore interface represents the transactional storage of MutableJobInstance objects.

Each job submitted through the JobManager API is represented by a MutableJobInstance instance. Throughout the submission pipeline, the MutableJobInstance instance is modified (e.g. on a state change) and persisted.
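
The modify-then-persist cycle can be illustrated with a minimal in-memory stand-in for a job store. This is a sketch under stated assumptions: `MutableJob` and `InMemoryJobStore` are hypothetical names, and "transactional" is approximated by making each call atomic and letting callers edit private copies until they call `update`; a real store such as HibernateJobInstanceStore would delegate to a transactional database.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical mutable job record; GridSAM's real MutableJobInstance differs.
final class MutableJob {
    final String id;
    String state;
    final Map<String, String> properties = new HashMap<>();

    MutableJob(String id, String state) { this.id = id; this.state = state; }

    MutableJob copy() {
        MutableJob c = new MutableJob(id, state);
        c.properties.putAll(properties);
        return c;
    }
}

// Hypothetical in-memory job store. Every method is synchronized (atomic) and
// exchanges copies, so a caller's unsaved edits never leak into the store.
final class InMemoryJobStore {
    private final Map<String, MutableJob> jobs = new HashMap<>();
    private long counter;

    synchronized MutableJob create() {
        MutableJob job = new MutableJob("job-" + (++counter), "pending");
        jobs.put(job.id, job.copy());
        return job;
    }

    // Persists the caller's modified copy (e.g. after a state change).
    synchronized void update(MutableJob job) {
        if (!jobs.containsKey(job.id)) {
            throw new IllegalArgumentException("unknown job " + job.id);
        }
        jobs.put(job.id, job.copy());
    }

    synchronized MutableJob get(String id) {
        MutableJob stored = jobs.get(id);
        return stored == null ? null : stored.copy();
    }
}
```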

The main implementation, HibernateJobInstanceStore, abstracts the object-to-relational mapping through the Hibernate library. It provides various modes of integration with JDBC-compliant databases. The default is a transient in-memory JDBC database backed by an embedded Hypersonic SQL server. Other modes include a file-based embedded database, or networked database access through a javax.sql.DataSource instance (supplied by object injection or JNDI lookup).
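
The three integration modes roughly correspond to different Hibernate connection settings. The helper below is hypothetical (how GridSAM actually passes these settings is not shown here); the property keys are standard Hibernate settings, and the `jdbc:hsqldb:mem:` / `jdbc:hsqldb:file:` URL forms and the `org.hsqldb.jdbcDriver` class are standard HSQLDB ones.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical enumeration of the persistence modes described above.
enum PersistenceMode { IN_MEMORY, FILE, DATASOURCE }

// Hypothetical helper mapping each mode to standard Hibernate connection properties.
final class HibernateSettings {
    static Map<String, String> forMode(PersistenceMode mode, String location) {
        Map<String, String> props = new LinkedHashMap<>();
        switch (mode) {
            case IN_MEMORY: // transient: contents vanish when the JVM exits
                props.put("hibernate.connection.driver_class", "org.hsqldb.jdbcDriver");
                props.put("hibernate.connection.url", "jdbc:hsqldb:mem:" + location);
                break;
            case FILE:      // embedded, but persisted to files at 'location'
                props.put("hibernate.connection.driver_class", "org.hsqldb.jdbcDriver");
                props.put("hibernate.connection.url", "jdbc:hsqldb:file:" + location);
                break;
            case DATASOURCE: // JNDI name of an externally managed javax.sql.DataSource
                props.put("hibernate.connection.datasource", location);
                break;
        }
        return props;
    }
}
```

In the DataSource mode no JDBC URL is set at all: connection details, pooling and the choice of database are owned by whoever provides the DataSource.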

Note: Hibernate is a powerful object/relational persistence and query service for Java. It supports most JDBC compliant transactional databases.

The default JobManager implementation (DefaultJobManager) uses the Quartz task scheduler library for thread-pooled, immediate or timed-trigger execution of queued jobs.

When a job arrives at the DefaultJobManager instance, it is stored as a MutableJobInstance by the configured JobInstanceStore component. The job instance is then scheduled to be passed to the DRMConnector pipeline. The wrapped Quartz Scheduler instance schedules the job under the control of the chosen Trigger (e.g. cron-like timed execution, or immediate thread-pool based execution).
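
The store-then-schedule flow can be sketched as follows. Note the swapped-in technique: a JDK `ScheduledExecutorService` stands in for the Quartz Scheduler, and unlike a durable Quartz setup it keeps nothing on disk. `SketchJobManager`, its state names and the `Consumer<String>` pipeline are hypothetical.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicLong;
import java.util.function.Consumer;

// Hypothetical sketch of the submit flow; a JDK scheduler replaces Quartz here,
// so queued jobs are NOT recoverable if the JVM dies.
final class SketchJobManager {
    private final Map<String, String> store = new ConcurrentHashMap<>(); // job id -> state
    private final AtomicLong ids = new AtomicLong();
    private final ScheduledExecutorService scheduler = Executors.newScheduledThreadPool(4);
    private final Consumer<String> pipeline; // stands in for the DRMConnector pipeline

    SketchJobManager(Consumer<String> pipeline) { this.pipeline = pipeline; }

    // 1. persist the job, 2. schedule it for the pipeline (delay 0 = immediate).
    String submit(long delay, TimeUnit unit) {
        String id = "job-" + ids.incrementAndGet();
        store.put(id, "pending");
        scheduler.schedule(() -> {
            store.put(id, "running");
            try {
                pipeline.accept(id);
                store.put(id, "done");
            } catch (RuntimeException e) {
                store.put(id, "failed");
            }
        }, delay, unit);
        return id;
    }

    String state(String id) { return store.get(id); }

    // Already-scheduled delayed tasks still run after shutdown() by default.
    void shutdown() {
        scheduler.shutdown();
        try {
            scheduler.awaitTermination(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }
}
```

A submit call with a delay of zero models immediate thread-pool execution; a positive delay models a simple timed trigger (cron-like triggers are beyond this sketch).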

Note: Quartz is an open-source task scheduling system that can be integrated with, or used alongside, J2EE or J2SE applications. It can be used to schedule the execution of a large number of Java tasks. Its persistence mechanism provides recoverable execution of scheduled jobs.

The DRMConnector interface represents the job launching pipeline. It is one of the most important extensible components in the GridSAM architecture, designed for integration with different Distributed Resource Management (DRM) systems.

To encourage re-use of job launching functionality, much common functionality (e.g. file staging, diagnostics, logging) is implemented as DRMConnectors. The MultiStageDRMConnector class provides the scheduling capability for chaining DRMConnectors together to form a pipeline. The org.icenigrid.gridsam.core.plugin.connector package contains useful DRMConnector implementations that can be composed into a multi-stage pipeline.
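
Chaining connectors is an instance of the Composite pattern: the chain implements the same interface as its parts. The sketch below uses a hypothetical `Stage` interface and `MultiStage` class; GridSAM's real DRMConnector and MultiStageDRMConnector signatures are not reproduced here.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical stage contract; stages signal failure with an unchecked exception.
interface Stage {
    void execute(Map<String, String> job);
}

// Composite: runs child stages in order; the first exception aborts the rest.
final class MultiStage implements Stage {
    private final List<Stage> stages;

    MultiStage(Stage... stages) { this.stages = new ArrayList<>(Arrays.asList(stages)); }

    @Override
    public void execute(Map<String, String> job) {
        for (Stage s : stages) {
            s.execute(job);
        }
    }
}
```

Because `MultiStage` is itself a `Stage`, reusable pieces such as logging or staging can be mixed freely, and whole pipelines can be nested inside other pipelines.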

For example, file staging is represented as a stage in a DRMConnector pipeline. GridSAM uses the Apache Virtual File System library to handle diverse file system resources (e.g. FTP, SFTP).

Some DRMConnector implementations might require long-running monitoring of jobs queued by the underlying DRM. A set of scheduling methods is exposed to the DRMConnector for scheduling periodic tasks. The Condor implementation, for example, uses this to periodically poll the underlying Condor system and synchronise job status. A DRMConnector can also insert properties specific to itself into the MutableJobInstance; this allows co-operating components in the pipeline to share job-specific state.
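
Periodic status polling combined with shared job properties might look like the following. This is a hypothetical sketch: `StatusPoller`, the `drm.job.id`/`drm.status` property keys and the `queryDrm` function (standing in for, say, a wrapper around a DRM command-line tool) are all invented for illustration.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.ScheduledFuture;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicReference;
import java.util.function.Function;

// Hypothetical poller: queries the DRM every periodMs and publishes the status
// into the job's shared properties; stops polling once "done" is observed.
final class StatusPoller {
    private final ScheduledExecutorService timer = Executors.newSingleThreadScheduledExecutor();

    CountDownLatch watch(Map<String, String> jobProps, Function<String, String> queryDrm, long periodMs) {
        CountDownLatch finished = new CountDownLatch(1);
        AtomicReference<ScheduledFuture<?>> handle = new AtomicReference<>();
        Runnable poll = () -> {
            String status = queryDrm.apply(jobProps.get("drm.job.id"));
            jobProps.put("drm.status", status); // visible to co-operating stages
            if ("done".equals(status)) {
                finished.countDown();
                ScheduledFuture<?> f = handle.get();
                // f can be null only if the very first run beat handle.set();
                // in that case the next run cancels instead.
                if (f != null) f.cancel(false);
            }
        };
        handle.set(timer.scheduleAtFixedRate(poll, 0, periodMs, TimeUnit.MILLISECONDS));
        return finished;
    }

    void shutdown() { timer.shutdownNow(); }
}
```

The job properties map should be thread-safe (a `ConcurrentHashMap` here), since the poller writes to it from its own timer thread.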

The most primitive form of job launching is forking a process locally on the machine hosting the GridSAM instance. This is achieved by chaining the StageInStage, ForkStage and StageOutStage, encapsulated in a MultiStageDRMConnector.

The ForkStage implementation in particular uses the auxiliary Shell API for forking processes. GridSAM provides two implementations, for local and for SSH-based POSIX shell forking, so the forking DRMConnector pipeline can fork jobs either locally or remotely. The Shell API is also used by other DRMConnectors, such as the Condor one, to integrate with DRM-specific command-line tools.
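
The value of the Shell abstraction is that the fork step depends only on an interface, so a local implementation can be swapped for a remote one. The sketch below assumes a POSIX `/bin/sh`; `Shell`, `LocalShell` and `ForkStep` are hypothetical names (GridSAM's actual Shell API and its SSH implementation are not shown). Arguments are single-quoted before being handed to `sh -c` to avoid shell injection.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.util.List;

// Hypothetical shell abstraction: run a POSIX command line, return exit code.
interface Shell {
    int run(String commandLine, StringBuilder out) throws IOException, InterruptedException;
}

// Local implementation: forks '/bin/sh -c <commandLine>' on this machine.
final class LocalShell implements Shell {
    @Override
    public int run(String commandLine, StringBuilder out) throws IOException, InterruptedException {
        Process p = new ProcessBuilder("/bin/sh", "-c", commandLine)
                .redirectErrorStream(true) // merge stderr into stdout
                .start();
        try (InputStream in = p.getInputStream()) {
            ByteArrayOutputStream buf = new ByteArrayOutputStream();
            byte[] chunk = new byte[4096];
            int n;
            while ((n = in.read(chunk)) != -1) buf.write(chunk, 0, n);
            out.append(buf.toString("UTF-8"));
        }
        return p.waitFor();
    }
}

// Fork step that depends only on Shell; an SSH-backed Shell would run remotely.
final class ForkStep {
    private final Shell shell;
    ForkStep(Shell shell) { this.shell = shell; }

    int fork(List<String> argv, StringBuilder out) throws IOException, InterruptedException {
        StringBuilder cmd = new StringBuilder();
        for (String a : argv) {
            if (cmd.length() > 0) cmd.append(' ');
            cmd.append(quote(a));
        }
        return shell.run(cmd.toString(), out);
    }

    // POSIX single-quoting: wrap in '...' and turn each embedded ' into '\''
    static String quote(String s) {
        return "'" + s.replace("'", "'\\''") + "'";
    }
}
```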

The Job Persistence sub-system provides persistence for job state and for properties inserted during the execution of the DRMConnector pipeline. This long-term storage of job state can be used by DRM-specific recovery routines to recover information associated with the job (e.g. a DRM-specific job id, which the DRM integration code can use to query the job's state).

Suppose a job fails to launch because the server crashes in the middle of a running pipeline. When the server is restarted, the underlying Quartz Scheduler, configured as durable, is restarted automatically from the persistence store and re-executes the launching pipeline for that job. Because recovery semantics differ across the underlying DRM systems, it is the responsibility of the individual DRMConnectors in the pipeline to handle recovery.
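
Since the pipeline may be re-executed after a restart, a launching step should be safe to run twice. One way, sketched below, is to check for a persisted DRM job id before submitting. `Drm`, `RecoverableLaunch` and the `drm.job.id`/`description` property keys are hypothetical; the properties map stands for state that a real connector would rely on the job store to persist.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical minimal DRM client; not a GridSAM type.
interface Drm {
    String submit(String jobDescription); // returns the DRM-specific job id
    String status(String drmJobId);
}

final class RecoverableLaunch {
    static final String DRM_ID = "drm.job.id"; // hypothetical property key

    // Re-runnable after a crash: if a DRM id was persisted earlier, the job
    // already reached the DRM, so re-attach to it instead of resubmitting.
    static String launch(Map<String, String> props, Drm drm) {
        String id = props.get(DRM_ID);
        if (id == null) {
            id = drm.submit(props.get("description"));
            props.put(DRM_ID, id); // assumed to be persisted by the job store
        }
        return drm.status(id);
    }
}
```

A crash between `submit` and persisting the id can still cause a duplicate submission; closing that window is exactly the kind of DRM-specific decision the text leaves to each connector.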

The API binds together the components provided by implementations of the various SPIs discussed above. GridSAM adopts the Builder pattern to create instances of JobManager. To enable dynamic composition of the configurable constituents, Apache Hivemind is used as a microkernel for expressing the configuration and composition of the components. The HiveBasedJobManagerBuilder bridges the API and Apache Hivemind.
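
The Builder pattern lets a caller assemble a JobManager from its constituents and have the composition validated once, at `build()` time. The types below (`Store`, `Connector`, `JobManagerSketch`) are hypothetical illustrations; GridSAM's JobManagerBuilder and HiveBasedJobManagerBuilder have their own APIs.

```java
import java.util.Objects;

// Hypothetical component types standing in for SPI implementations.
interface Store { }
interface Connector { }

final class JobManagerSketch {
    final Store store;
    final Connector connector;
    final int threads;

    private JobManagerSketch(Builder b) {
        this.store = b.store;
        this.connector = b.connector;
        this.threads = b.threads;
    }

    static Builder builder() { return new Builder(); }

    static final class Builder {
        private Store store;
        private Connector connector;
        private int threads = 4; // illustrative default

        Builder store(Store s) { this.store = s; return this; }
        Builder connector(Connector c) { this.connector = c; return this; }
        Builder threads(int n) {
            if (n < 1) throw new IllegalArgumentException("threads must be >= 1");
            this.threads = n;
            return this;
        }

        // Validates the composition, so a half-configured manager never escapes.
        JobManagerSketch build() {
            Objects.requireNonNull(store, "a job store is required");
            Objects.requireNonNull(connector, "a DRM connector is required");
            return new JobManagerSketch(this);
        }
    }
}
```

A configuration-driven builder follows the same idea, except that the components are read from configuration documents rather than passed in by hand.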

A GridSAM configuration is a set of Hivemind XML documents describing service-points (object declarations), contributions (service-point properties) and configuration-points (schema definitions of the contribution format).

The documents describe registered services (components), each typed by its service interface, and the implementation attached to each service. The implementation is instantiated and configured through the JavaBeans properties (set/get) given in the configuration document. Services can collaborate with each other via dependency injection.

GridSAM sub-systems access required services (components) by asking the Hivemind Registry, available in the pipeline context, for an implementation of a given interface. For example, the ForkStage code asks for an implementation of Shell to fork jobs. Pre-defined configurations for instantiating a JobManager for embedded use with local or SSH shell execution can be found in GRIDSAM_HOME/src/java/org/icenigrid/gridsam/resource/config/*.xml.
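
Looking up services by interface, combined with JavaBeans setter injection, can be illustrated with a tiny typed registry. This is explicitly NOT the Hivemind Registry API: `TinyRegistry`, `Shell` and `ForkComponent` are hypothetical names that only demonstrate the lookup-by-interface idea.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

// Hypothetical typed registry: components are registered against an interface
// and looked up by that interface. Factories may resolve their own dependencies.
final class TinyRegistry {
    private final Map<Class<?>, Function<TinyRegistry, ?>> factories = new HashMap<>();

    <T> void register(Class<T> iface, Function<TinyRegistry, ? extends T> factory) {
        factories.put(iface, factory);
    }

    <T> T getService(Class<T> iface) {
        Function<TinyRegistry, ?> f = factories.get(iface);
        if (f == null) throw new IllegalArgumentException("no service for " + iface.getName());
        return iface.cast(f.apply(this));
    }
}

// Hypothetical service interface.
interface Shell { String name(); }

// JavaBeans-style component whose dependency is injected through a setter.
final class ForkComponent {
    private Shell shell;
    public void setShell(Shell shell) { this.shell = shell; }
    public Shell getShell() { return shell; }
}
```

The consuming code only names the interface it needs; which implementation it receives (local or SSH, say) is decided entirely by what was registered.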

Hivemind also manages the availability and lifetime of the services available through the Registry. As described in the configuration, services can be pooled, thread-pooled, made singletons, etc. When the Registry is shut down (e.g. on VM exit, or when the web application is undeployed), the services are notified so that they can clean up their resources.
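
A singleton service model with shutdown notification can be sketched as below. The class and method names are hypothetical and only mirror the behaviour described above; services that implement `AutoCloseable` are closed newest-first at shutdown, so a service that lazily created its dependency is closed before that dependency.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.function.Supplier;

// Hypothetical sketch of a singleton service model plus shutdown clean-up.
final class ManagedServices {
    private final Deque<AutoCloseable> created = new ArrayDeque<>();

    // Singleton model: the factory runs at most once; the instance is shared.
    <T> Supplier<T> singleton(Supplier<T> factory) {
        return new Supplier<T>() {
            private T instance;

            @Override
            public synchronized T get() {
                if (instance == null) {
                    instance = factory.get();
                    track(instance);
                }
                return instance;
            }
        };
    }

    private synchronized void track(Object service) {
        if (service instanceof AutoCloseable) created.push((AutoCloseable) service);
    }

    // Registry shutdown: close services newest-first. A failing close() does
    // not stop the remaining clean-up; returns how many closed successfully.
    synchronized int shutdown() {
        int closed = 0;
        while (!created.isEmpty()) {
            try {
                created.pop().close();
                closed++;
            } catch (Exception e) {
                // a real registry would log this
            }
        }
        return closed;
    }
}
```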

System administrators and developers are encouraged to consult the Hivemind tutorial for further information on the flexibility (and the inherent complexity) of Hivemind.